
From Sensors to ChatGPT: How to Unlock Connectivity and Automation

In this guide, we explore industrial digitization, connectivity patterns, analytics, AI, and Large Language Models (LLMs), with clear deployment examples.

Imagine a modern factory as a grand orchestra. Each machine, sensor, and process plays its part, contributing to the overall harmony. However, like an orchestra without a conductor, these components cannot reach their full potential without coordination and guidance. This is where industrial digitization steps in as the maestro, orchestrating the various elements to create a symphony of efficiency, productivity, and innovation. At Very, we’re here to help you conduct this symphony, ensuring every component contributes to a harmonious and efficient whole.

In this comprehensive guide, we will explore the importance of industrial digitization, outline standard patterns to rapidly bring connectivity to your processes and assets, delve into the analytics journey that follows once your data pipelines and storage are in place, demystify the buzz around AI and Large Language Models (LLMs), and present straightforward examples of deploying industrial LLMs.

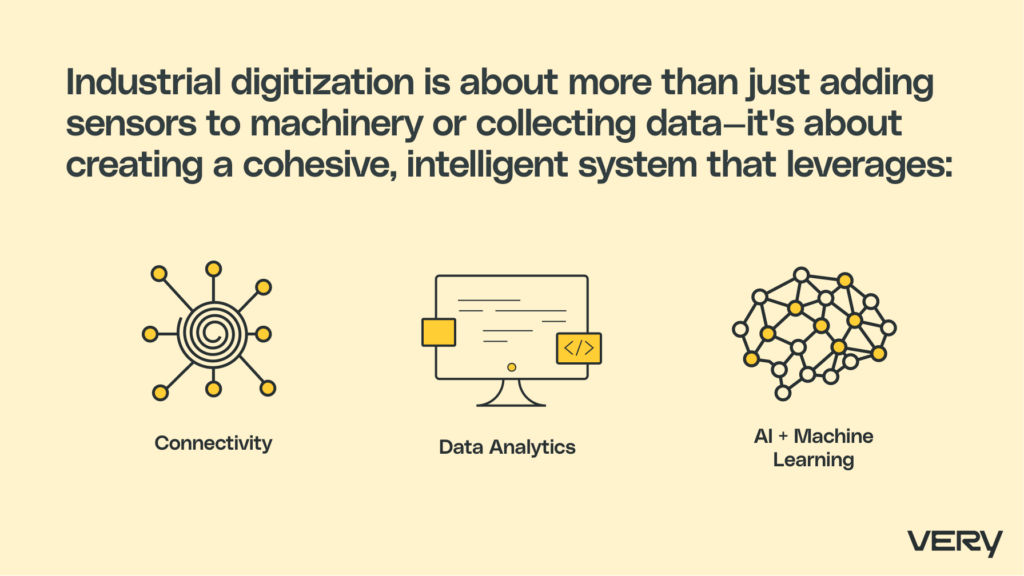

Importance of Industrial Digitization

Industrial digitization is about more than just adding sensors to machinery or collecting data—it’s about creating a cohesive, intelligent system that leverages connectivity, data analytics, and advanced technologies like AI and machine learning to optimize operations, improve decision-making, and drive innovation. For manufacturers, this means integrating diverse processes such as material handling, machining, and robotic assembly into a unified digital infrastructure.

The goal is to create a seamless flow of information from the shop floor to the top floor. By connecting telemetry from these processes to the cloud and combining it with adjacent systems, businesses can provide a unified window into the data and insights that can be leveraged by users at local facilities, offices worldwide, and even stakeholders on the move through mobile devices. This connectivity future-proofs businesses, enabling them to harness the power of current and future digital innovations.

Unlocking the Promise of AI Through Connectivity

Establishing connectivity in your processes is crucial to unlocking AI’s promise. The journey of industrial digitization begins with adopting standard patterns across four core practices: product and interface design, hardware and firmware development, software and digital infrastructure, and data solutions, including AI and machine learning. These practices collectively form the foundation needed to deploy technologies from sensors to ChatGPT and beyond.

Build IoT Value

The journey begins at the base of the IoT value pyramid, which consists of traditional processes and assets you wish to automate, optimize, and leverage. The next step is to install devices that sense the state of your processes. Following this, local connectivity and edge computing are deployed, providing localized value. However, this value is greatly amplified when these devices are connected to the cloud, allowing data from across the organization to be pooled together.

On top of this pooled enterprise data, analytics, visualizations, and machine learning are deployed. As you progress up the value chain, your team’s focus and skill set must shift from hardware and local assets to software and data science. Your perspective must also shift from a local, siloed view to a broader one that incorporates processes and data from across the organization.

Establish Connectivity Pattern

While each implementation is unique, a standard Node-Gateway-Cloud pattern has been developed to add connectivity quickly. The nodes are low-powered devices attached to the assets and deployed in high numbers across a facility. These nodes are typically custom hardware designed to reduce unit cost and meet specific demands. The gateway, often off-the-shelf hardware, connects the nodes to the cloud and can process data and execute real-time analytics.

Determining where computation should occur is an interesting trade-off. Computation closer to local assets minimizes latency, cloud and communication costs, and data exposure risks but increases hardware and firmware management costs and decreases system visibility. This visibility is crucial for continued machine learning development.
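
As a rough illustration of this trade-off, the sketch below shows a gateway collapsing raw node samples into one summary record per node before anything is sent to the cloud. The `Reading` type, the `summarize_on_gateway` function, and the node names are hypothetical and are not part of any specific Very product. Note what the summary gives up: the cloud never sees the individual samples, which is the loss of visibility described above.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    node_id: str   # hypothetical node identifier
    value: float   # e.g. one temperature sample


def summarize_on_gateway(readings: list[Reading]) -> dict[str, dict[str, float]]:
    """Collapse raw per-node samples into one summary record per node."""
    by_node: dict[str, list[float]] = {}
    for reading in readings:
        by_node.setdefault(reading.node_id, []).append(reading.value)
    return {
        node: {"count": len(vals), "min": min(vals), "max": max(vals), "mean": mean(vals)}
        for node, vals in by_node.items()
    }


# Three raw samples become two summary records to upload.
raw = [Reading("node-a", 20.0), Reading("node-a", 22.0), Reading("node-b", 30.0)]
summary = summarize_on_gateway(raw)
```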

Another important component of the local system is the mobile application, which simplifies device onboarding and allows technicians to troubleshoot the system. The cloud, while depicted as a single system, often consists of a collection of platforms managing firmware, primary data processing, and AI platforms. Regardless, the cloud must communicate with the devices, react to predefined events, store data, and manage devices. It also needs an API to adjacent systems like Product Lifecycle Management Systems and Job Tracking Systems.

The Analytics Journey

When it comes to developing an AI platform, we follow the mantra of “crawl-walk-run.” Before implementing advanced AI algorithms, it is essential to start with the fundamentals and then progress systematically. The analytics journey can be framed using four levels:

- Descriptive Analytics: At this level, we see what devices are connected, the associated telemetry, and basic statistical analysis for anomaly detection.

- Diagnostic Analytics: As data volume and variety grow, ad-hoc investigations and multi-factor analysis are performed to understand why things happened.

- Predictive Analytics: Moving from reactive to proactive, machine learning is deployed to predict future events, such as when maintenance is needed to prevent failures.

- Prescriptive Analytics: Using advanced algorithms like Reinforcement Learning, actions are prescribed to maximize objectives such as reducing costs or improving key performance indicators.

By systematically moving up these four levels, businesses can continually and rapidly provide value as their data grows and their algorithms mature.
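
To make the first level concrete, here is a minimal sketch of the kind of basic statistical anomaly check that descriptive analytics might start with. It uses a simple z-score rule, which is one common choice and an assumption here, not necessarily the method used in any given deployment. The function name, threshold, and sample values are illustrative.

```python
from statistics import mean, stdev


def zscore_anomalies(values: list[float], threshold: float = 3.0) -> list[int]:
    """Return indices of samples more than `threshold` standard deviations from the mean."""
    if len(values) < 2:
        return []
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all samples identical, nothing to flag
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Illustrative telemetry with one obvious spike at the end.
telemetry = [10.0, 10.2, 9.9, 10.1, 10.0, 9.8, 10.1, 25.0]
flagged = zscore_anomalies(telemetry, threshold=2.0)
```

With very short windows a single outlier inflates the standard deviation, so a lower threshold (2.0 here) or a robust statistic such as the median may be more practical than the usual 3.0.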

Analytics & Visualization Platform

In the spirit of crawl-walk-run, it is recommended that an analytics and visualization platform be deployed before pursuing machine learning. This approach allows for rapid deployment and the generation of real value from the first two levels of analytics: descriptive and diagnostic. With Infrastructure-as-Code, these patterns can be reused, rapidly deployed, tested, and re-architected.

Growing into an AI Platform

Once the analytics and visualization platform is in place, data begins flowing into the system, allowing for the development of initial machine learning algorithms. This process involves continually collecting and processing training data, training new models, and monitoring their performance to determine when updates are necessary.
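
One simple way to decide when an update is necessary is to compare recent prediction error against the error measured when the model was deployed. The sketch below assumes that approach; the function name, the 20% tolerance, and the error values are hypothetical.

```python
def needs_retraining(baseline_error: float, recent_errors: list[float], tolerance: float = 0.2) -> bool:
    """Return True when the recent mean error exceeds the baseline by more than `tolerance`.

    `tolerance` is fractional: 0.2 means a 20% degradation is allowed.
    """
    if not recent_errors:
        return False  # no evidence yet, keep the current model
    recent = sum(recent_errors) / len(recent_errors)
    return recent > baseline_error * (1.0 + tolerance)


stable = needs_retraining(1.0, [1.0, 1.1])    # mean 1.05, within tolerance
degraded = needs_retraining(1.0, [1.5, 1.3])  # mean 1.4, beyond tolerance
```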

Demystifying Generative AI, Large Language Models, and ChatGPT

With the foundation of connectivity and an AI platform in place, it’s time to turn to Generative AI, Large Language Models (LLMs), and ChatGPT. Initially, it might be challenging to see how these technologies fit into the industrial automation landscape. However, much as mobile technology did, they can significantly amplify what a system can do, to the point that systems without Generative AI may soon seem outdated.

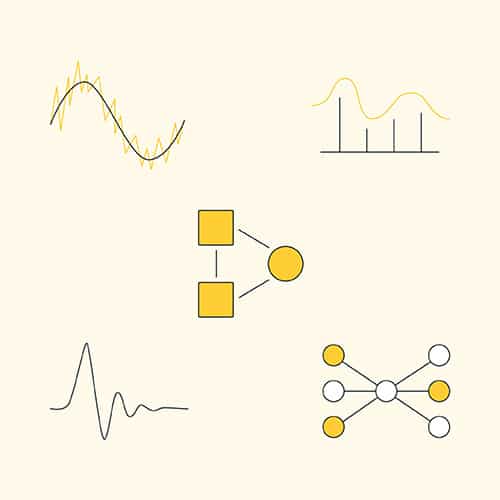

Related Ecosystem

The AI ecosystem is vast and complex, encompassing numerous overlapping terminologies and technologies. At its core, AI includes machine learning, which itself has several branches, including supervised learning, unsupervised learning, and reinforcement learning. While there are many traditional machine learning algorithms, Artificial Neural Networks (ANNs), particularly deep learning, have captured much attention due to their flexibility and power. Large Language Models, a subset of deep learning, are specifically designed to generate new text responses.

Three Types of LLMs

LLMs can be categorized into three main types based on how they bring value: General, Domain Aware, and Domain Constrained. Let’s take a more detailed look at each:

General LLM

General LLMs, such as those with over a trillion parameters trained on vast amounts of text, are incredibly flexible. They can perform a wide range of tasks, from answering questions to summarizing documents and translating languages. However, their flexibility and extensive training data can sometimes result in inaccurate or offensive responses.

Domain Aware LLM

Domain Aware LLMs focus on a specific application or domain, increasing the relevance of responses and enhancing the user experience. These models are typically fine-tuned from existing foundational models using domain-specific text and augmented with prompt engineering.

Domain Constrained LLM

Domain Constrained LLMs extend domain awareness by limiting responses to a predefined set of information. This approach ensures accuracy and reliability, particularly in industrial automation, where incorrect responses can have significant consequences. These models often use a Retrieval Augmented Generation (RAG) architecture backed by proprietary data storage and high-performance retrieval systems.

Examples of Industrial LLMs

Now, let’s explore two implementations of industrial LLMs to see how they can be deployed effectively.

Knowledge-Based Question Answering

A Knowledge-Based Question Answering (KBQA) system is an excellent example of a Domain Constrained LLM that uses a generalized RAG architecture to make enterprise data available quickly. Imagine a technician asking for help with an issue in natural language and receiving accurate, referenced responses instead of manually searching through the company intranet. This system generates custom responses for each question, maps text content to vector spaces for semantic search, and tailors responses to the individual user based on their group, title, or current work assignment.
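
The retrieval step can be sketched in a few lines. The example below is a toy: it uses word counts as a stand-in for a learned embedding model and ranks documents by cosine similarity to the question. The document IDs and text are invented for illustration, and a production system would use a real embedding model and vector store.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts. A real system would use a learned model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: dict[str, str], top_k: int = 1) -> list[str]:
    """Return the IDs of the `top_k` documents most similar to the question."""
    q = embed(question)
    ranked = sorted(documents, key=lambda doc_id: cosine(q, embed(documents[doc_id])), reverse=True)
    return ranked[:top_k]


# Hypothetical knowledge base entries.
docs = {
    "manual-conveyor": "Conveyor belt tension adjustment and belt tracking procedure",
    "manual-press": "Hydraulic press pressure calibration and seal replacement",
}
hits = retrieve("How do I adjust conveyor belt tension?", docs)
```

In a RAG pipeline, the retrieved passages would then be placed in the LLM prompt along with their IDs, which is what allows the response to cite its sources.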

KBQA for Data Analytics and Visualization

Extending the KBQA system to include live telemetry and on-demand visualizations can significantly enhance its value. Task-oriented LLM agents enable users from the shop floor to the boardroom to create custom visualizations based on the latest data. These agents can perform complex sub-tasks, such as generating SQL and Python code for data extraction and visualization. Users can refine visualizations through additional questions, and the process can learn and improve over time.
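
When an agent generates SQL, it is prudent to check the query before running it. The sketch below assumes a hypothetical `generate_sql` function standing in for the LLM call (it returns a fixed query here) and a hypothetical `telemetry` table in an in-memory SQLite database. The guard only accepts a single SELECT statement.

```python
import sqlite3


def generate_sql(question: str) -> str:
    """Placeholder for an LLM call; returns a fixed query for illustration only."""
    return "SELECT machine, AVG(temp_c) AS avg_temp FROM telemetry GROUP BY machine ORDER BY machine"


def run_read_only(conn: sqlite3.Connection, sql: str) -> list[tuple]:
    """Run generated SQL only if it is a single SELECT statement."""
    statement = sql.strip().rstrip(";")
    if ";" in statement or not statement.lower().startswith("select"):
        raise ValueError("only single SELECT statements are allowed")
    return conn.execute(statement).fetchall()


# Hypothetical telemetry table with a few rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE telemetry (machine TEXT, temp_c REAL)")
conn.executemany(
    "INSERT INTO telemetry VALUES (?, ?)",
    [("press", 60.0), ("press", 70.0), ("lathe", 40.0)],
)
rows = run_read_only(conn, generate_sql("Average temperature per machine?"))
```

A prefix check like this is deliberately naive. In practice it would be combined with a database account that has read-only permissions, so that safety does not depend on string inspection alone.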

Additional Capabilities of LLMs

Beyond industrial automation, LLMs offer several popular capabilities that can benefit organizations. These include language translation, content generation for sales and marketing, customer-facing agent assistants, and document summarization and analysis. While not core to industrial automation, these capabilities can add significant value to various departments within an organization.

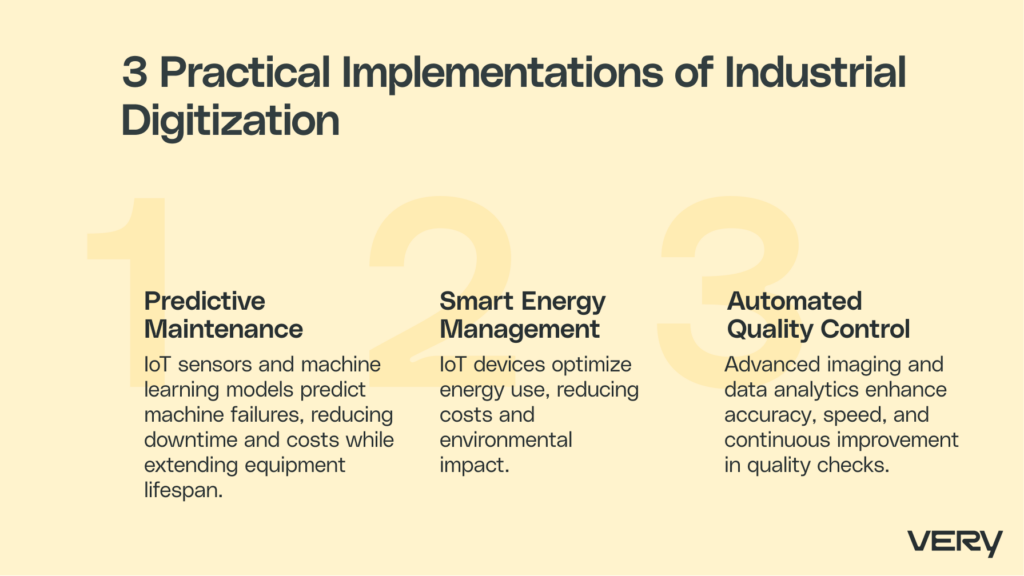

3 Practical Implementations of Industrial Digitization

Understanding the theoretical framework is essential, but seeing real-world applications solidifies the value of industrial digitization. Let’s delve into some practical implementations and real-world examples that demonstrate these technologies’ significant impact.

Predictive Maintenance in Manufacturing

In a manufacturing setup, machines are the lifeblood of production. Unexpected downtimes can lead to significant financial losses and disruptions in the supply chain. Predictive maintenance leverages IoT sensors and data analytics to predict machine failures before they occur.

Implementation

Sensors are installed on critical machinery to monitor parameters such as vibration, temperature, and noise levels. This data is continuously collected and analyzed in real time. Machine learning models trained on historical data identify patterns that precede failures, triggering maintenance alerts before a breakdown occurs.
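
As a simplified stand-in for a trained model, the sketch below fits a least-squares line to hourly vibration samples and extrapolates when the trend would cross an alert limit. A real predictive maintenance model would learn richer failure patterns from historical data; the function name, the units, and the limit of 6.0 are assumptions made for illustration.

```python
from typing import Optional


def hours_until_limit(samples: list[float], limit: float) -> Optional[float]:
    """Fit a least-squares line to hourly samples and extrapolate to `limit`.

    Returns hours from the last sample, or None if the trend is flat or falling.
    """
    n = len(samples)
    if n < 2:
        return None
    x_mean = (n - 1) / 2
    y_mean = sum(samples) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(samples))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = y_mean - slope * x_mean
    crossing = (limit - intercept) / slope  # hour index where the line reaches the limit
    return max(0.0, crossing - (n - 1))


# Hypothetical hourly vibration velocity readings, rising steadily.
vibration_mm_s = [2.0, 2.5, 3.0, 3.5, 4.0]
eta = hours_until_limit(vibration_mm_s, limit=6.0)
```

A maintenance alert could then be raised whenever the estimate drops below the lead time needed to schedule a technician.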

Benefits

- Reduced Downtime: Predictive maintenance significantly reduces unexpected downtimes, ensuring smoother production processes.

- Cost Savings: By addressing issues before they escalate, companies save on repair costs and avoid the financial impact of halted production.

- Extended Equipment Lifespan: Regular maintenance based on actual machine condition extends the lifespan of equipment, maximizing return on investment.

Smart Energy Management

Energy consumption is a significant operational cost for manufacturing facilities. Smart energy management systems optimize energy use, reducing costs and environmental impact.

Implementation

IoT devices monitor energy consumption across the facility, from lighting to heavy machinery. Data is analyzed to identify inefficiencies and peak usage times. Automated systems adjust energy use based on real-time data, such as dimming lights during low activity periods or optimizing machine operations for off-peak hours.
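
Identifying peak usage times can start with a simple aggregation. The sketch below averages power draw by hour of day and returns the busiest hours; the function name and the sample readings are hypothetical.

```python
from collections import defaultdict


def peak_hours(readings: list[tuple[int, float]], top_n: int = 2) -> list[int]:
    """Return the `top_n` hours of day with the highest average kW draw.

    Each reading is an (hour_of_day, kilowatts) pair.
    """
    by_hour: dict[int, list[float]] = defaultdict(list)
    for hour, kw in readings:
        by_hour[hour].append(kw)
    averages = {hour: sum(vals) / len(vals) for hour, vals in by_hour.items()}
    return sorted(averages, key=lambda h: averages[h], reverse=True)[:top_n]


# Hypothetical facility-wide readings.
samples = [(9, 120.0), (9, 140.0), (14, 200.0), (14, 220.0), (2, 40.0), (2, 60.0)]
peaks = peak_hours(samples)
```

Flexible loads could then be scheduled away from the returned hours.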

Benefits

- Cost Reduction: Optimized energy use translates to lower utility bills.

- Sustainability: Reduced energy consumption decreases the facility’s carbon footprint, contributing to corporate sustainability goals.

- Regulatory Compliance: Smart energy management helps meet regulatory requirements for energy efficiency and emissions.

Quality Control and Assurance

Ensuring product quality is paramount in manufacturing. Traditional quality control methods can be time-consuming and prone to human error. Industrial digitization offers automated quality control through advanced imaging and data analytics.

Implementation

High-resolution cameras and sensors inspect products on the production line, capturing detailed images and measurements. Machine learning algorithms analyze these data points to detect defects, deviations, and non-conformities in real time.
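
Before any machine learning is applied, the measurement side of inspection often reduces to a tolerance check. The sketch below shows that baseline only; it is not a defect-detection model. The `Spec` type, feature names, and tolerances are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Spec:
    nominal_mm: float
    tolerance_mm: float


def inspect(measurements_mm: dict[str, float], specs: dict[str, Spec]) -> list[str]:
    """Return the names of features that are missing or outside nominal +/- tolerance."""
    failures = []
    for name, spec in specs.items():
        measured = measurements_mm.get(name)
        if measured is None or abs(measured - spec.nominal_mm) > spec.tolerance_mm:
            failures.append(name)
    return failures


# Hypothetical part specification and one measured part.
specs = {"bore_diameter": Spec(10.0, 0.05), "length": Spec(50.0, 0.1)}
defects = inspect({"bore_diameter": 10.02, "length": 50.3}, specs)
```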

Benefits

- Enhanced Accuracy: Automated systems provide consistent and precise quality checks, reducing the risk of defects reaching customers.

- Increased Throughput: Faster inspection processes accelerate production rates without compromising quality.

- Data-Driven Improvements: Detailed data from quality checks enables continuous improvement in production processes and product design.

Challenges and Considerations in Industrial Digitization

While the benefits of industrial digitization are substantial, implementing these technologies comes with challenges that must be addressed to ensure success.

Data Security and Privacy

With increased connectivity comes the risk of cyber threats. Protecting sensitive data and maintaining privacy is paramount.

Strategies

- Robust Security Protocols: Implementing strong encryption, secure authentication methods, and regular security audits.

- Compliance: Adhering to industry standards and regulations for data protection and privacy.

- Training: Educating employees about cybersecurity best practices and potential threats.

Integration with Legacy Systems

Many manufacturing facilities operate with legacy systems that are not inherently designed for digital integration.

Strategies

- Gradual Integration: Phasing in digital technologies while maintaining legacy systems ensures smooth transitions and minimizes disruptions.

- Interoperability Solutions: Utilizing middleware and APIs to bridge the gap between new digital systems and existing infrastructure.

- Custom Solutions: Developing bespoke solutions tailored to the specific needs and constraints of legacy systems.

The Future of Industrial Digitization

Industrial digitization is a continuous journey, with ongoing advancements in technology paving the way for new opportunities and innovations.

Edge Computing

Edge computing, where data processing occurs closer to the source, reduces latency and enhances real-time decision-making capabilities.

Benefits

- Improved Response Times: Immediate data processing leads to faster responses and actions.

- Reduced Bandwidth: Less data needs to be transmitted to central servers, lowering bandwidth requirements and costs.

- Enhanced Privacy: Localized data processing reduces the risk of data breaches during transmission.

Digital Twins

Digital twins, virtual replicas of physical assets, provide a powerful tool for simulation, monitoring, and optimization.

Applications

- Predictive Maintenance: Digital twins simulate different scenarios to predict and prevent equipment failures.

- Process Optimization: Virtual testing of process changes before implementation minimizes risks and optimizes outcomes.

- Training and Simulation: Digital twins offer a safe and realistic environment for employee training and skill development.

Final Thoughts

Industrial digitization represents a transformative shift in manufacturing, akin to the leap from manual craftsmanship to assembly-line production. By embracing connectivity, data analytics, and AI, manufacturers can unlock unprecedented levels of efficiency, productivity, and innovation.

Though it comes with its own set of challenges, such as data security and legacy system integration, the rewards are substantial. Predictive maintenance reduces downtime, smart energy management cuts costs, and automated quality control enhances product reliability.

Looking ahead, the future holds even greater promise, with edge computing and digital twins poised to drive further advancements. Industrial digitization is not just a trend but a fundamental evolution in how we manufacture and operate, offering a pathway to sustainable growth and competitive advantage in a rapidly changing world.

Author: Daniel Fudge

Senior Director of Software Engineering & Data Solutions

Daniel leads the data science and AI discipline at Very. Driven by ownership, clarity and purpose, Daniel works to nurture an environment of excellence among Very’s machine learning and data engineering experts — empowering his team to deliver powerful business value to clients.