
Unlocking the Power of IoT Analytics: From Data Collection to AI-Driven Insights

This whitepaper explores the four levels of analytics, the technical requirements for a robust analytics and AI platform, and examines two key IoT application patterns: low-power telemetry and heavy AI workloads.

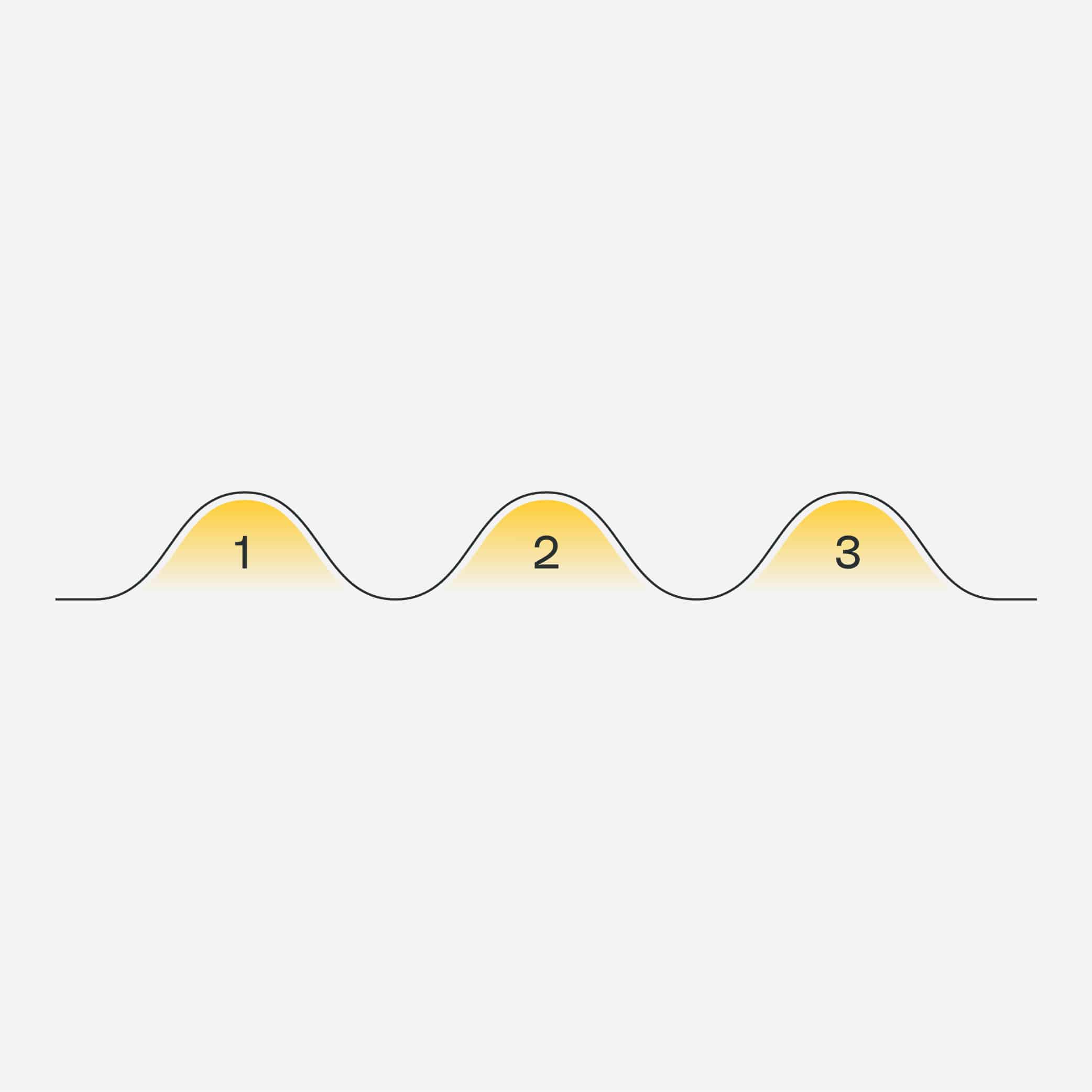

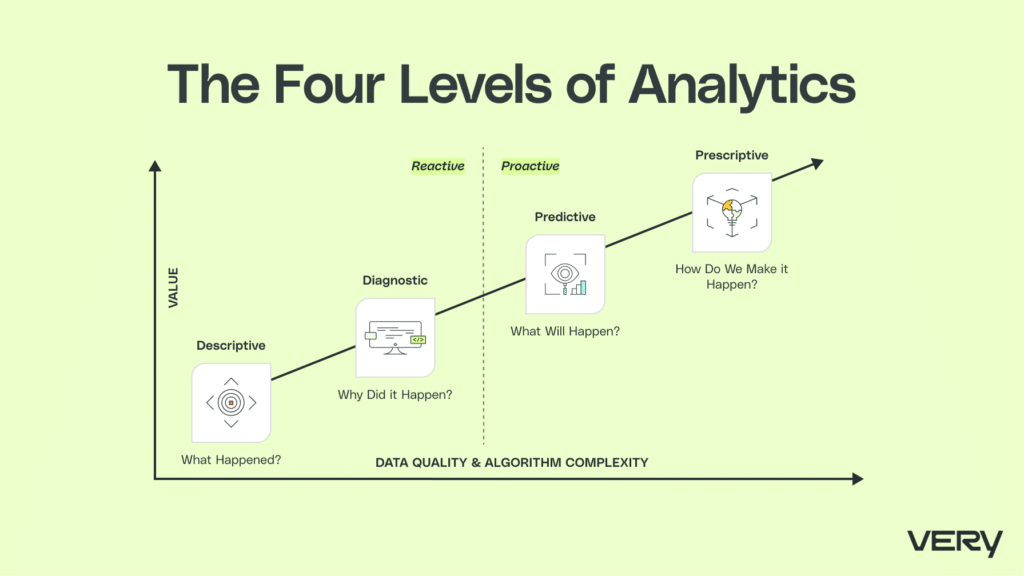

As IoT technology continues to advance, so too does the need for effective analytics and digital infrastructure to process the massive volumes of data these systems generate. At Very, we categorize the journey through analytics into four levels: descriptive, diagnostic, predictive, and prescriptive. Each stage offers increasing value as organizations evolve from simply understanding their operations to making informed, automated decisions that optimize processes. Alongside this analytics progression, companies must also navigate evolving infrastructure needs—from low-power telemetry setups to systems capable of handling heavy AI workloads.

The sections below cover the four levels of analytics, the technical requirements for a robust analytics and AI platform, and two key IoT application patterns: low-power telemetry and heavy AI workloads.

The Four Levels of Analytics: Building Value Through Data Maturity

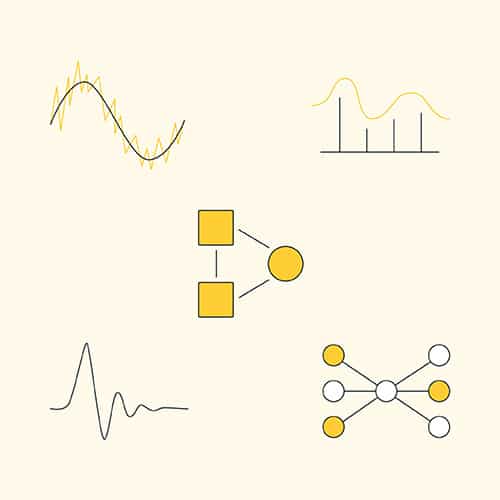

In IoT, data analytics doesn’t just provide insights—it guides strategic decisions. We frame the analytics journey in four stages:

- Descriptive Analytics: This initial stage of analytics reveals “what happened.” Descriptive insights allow businesses to see connected devices, their telemetry, and patterns within data through standard statistical analysis. Companies gain visibility into operational data, laying the groundwork for more advanced capabilities.

- Diagnostic Analytics: With growing data volume and variety, businesses move to the diagnostic phase to understand “why things happened.” Multi-factor analyses and visualizations offer in-depth insights into the root causes of anomalies and other patterns. This phase builds the context needed to respond intelligently to data trends.

- Predictive Analytics: Here, analytics shift from reactive to proactive. Machine learning models predict future events, like equipment failure, enabling predictive maintenance and advanced anomaly detection. This foresight helps organizations prevent costly downtime, increasing system reliability and efficiency.

- Prescriptive Analytics: This final phase involves more advanced machine learning models and algorithms, such as reinforcement learning, to suggest the best actions for reaching business goals, like minimizing costs or improving key performance indicators (KPIs). By applying prescriptive analytics, companies move beyond understanding and anticipating outcomes—they optimize their processes automatically to maximize impact.

Progressing through these stages equips organizations to derive increasing value from their IoT data and enhance the sophistication of their decision-making.
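To make the four levels concrete, here is a minimal Python sketch that applies each one to a toy telemetry series. The sample values, the function names, and the maintenance limit are all hypothetical and chosen only for illustration. The prescriptive step is a simple threshold rule standing in for the more advanced techniques mentioned above (it is not reinforcement learning).

```python
from statistics import mean, pstdev

# Hypothetical hourly readings from one machine (illustrative values only).
vibration = [1.0, 1.2, 1.4, 1.6, 1.8]      # mm/s
temperature = [50, 52, 54, 56, 58]         # degrees C


def describe(values):
    """Descriptive: summarize what happened."""
    return {"mean": mean(values), "stdev": pstdev(values), "max": max(values)}


def pearson(xs, ys):
    """Diagnostic: how strongly two signals move together (-1 to 1)."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def linear_forecast(values, steps_ahead):
    """Predictive: fit a least-squares line and extrapolate it forward."""
    n = len(values)
    xs = range(n)
    mx, my = mean(xs), mean(values)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, values))
             / sum((x - mx) ** 2 for x in xs))
    intercept = my - slope * mx
    return intercept + slope * (n - 1 + steps_ahead)


def recommend(forecast, limit):
    """Prescriptive (rule-based stand-in): pick an action from the forecast."""
    return "schedule_maintenance" if forecast > limit else "continue_monitoring"
```

In this sketch, `recommend(linear_forecast(vibration, 2), limit=2.0)` chains the predictive and prescriptive steps: the forecast feeds directly into the choice of action.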

The Foundation of an Analytics & Visualization Platform

For many organizations, the first step in this analytics journey is deploying an analytics and visualization platform. Following a “crawl-walk-run” approach, an analytics platform establishes a structured foundation for descriptive and diagnostic analytics before jumping into machine learning. This platform enables rapid deployment, generates immediate value, and utilizes Infrastructure-as-Code to allow scalability, testing, and re-architecture as necessary.

By prioritizing analytics and visualization, companies can glean significant insights early on, allowing them to address immediate needs while preparing the infrastructure for more advanced AI functionalities.

Growing Into an AI Platform: Enabling Continuous Learning

Once data flows into the analytics and visualization platform, companies can begin developing and refining machine learning models. An AI platform must support essential machine learning operations (MLOps) capabilities, including data collection, model training, performance monitoring, and model updating. This infrastructure is crucial for managing an evolving suite of models that keep pace with changing business requirements.

A strong MLOps approach enables companies to deploy models effectively and adjust them as needed, whether by gathering additional training data or deploying new algorithms. By building this adaptability into the AI platform, businesses can respond to new data patterns or changing conditions with agility.
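As a rough illustration of the performance-monitoring step, the sketch below tracks a rolling window of prediction errors and flags when a model may need retraining. The class name `ModelMonitor`, the window size, and the error threshold are assumptions made for this example, not part of any specific MLOps product.

```python
from collections import deque


class ModelMonitor:
    """Tracks recent prediction errors and flags possible model drift."""

    def __init__(self, window=50, max_mean_abs_error=1.0):
        # Only the most recent `window` errors are kept.
        self.errors = deque(maxlen=window)
        self.max_mean_abs_error = max_mean_abs_error

    def record(self, predicted, actual):
        """Store the absolute error once the true value is known."""
        self.errors.append(abs(predicted - actual))

    def mean_abs_error(self):
        return sum(self.errors) / len(self.errors) if self.errors else 0.0

    def needs_retraining(self):
        """True when the window is full and the mean error exceeds the limit."""
        window_full = len(self.errors) == self.errors.maxlen
        return window_full and self.mean_abs_error() > self.max_mean_abs_error
```

Waiting for a full window before raising the flag is one possible design choice: it avoids triggering a retraining job on the first few noisy samples after deployment.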

IoT Application Patterns: Low-Power Telemetry and Heavy AI Workloads

Different IoT applications come with unique requirements that shape the infrastructure and analytics needed for success. Let’s explore two common patterns: low-power telemetry and heavy AI workloads.

Low-Power Telemetry & Control: Efficiency in Industrial and Commercial Applications

Many IoT applications, especially in industrial or commercial settings, require low-power telemetry to monitor distributed devices across large areas. This pattern’s key features include:

- Battery-powered nodes that operate independently in challenging wireless communication environments.

- Environmental monitoring and transmission of device telemetry.

- Localized event detection and control with minimal data transmission to save power.

For applications with high levels of radio frequency (RF) interference, options like LoRa (a low-power, long-range communication technology) provide attractive solutions. Although LoRa’s low bandwidth restricts raw data transmission, edge computing can overcome this limitation by processing data locally at the node level. By transmitting only essential metrics, the node significantly conserves energy and reduces communication overhead.
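The idea of sending only essential metrics can be sketched as follows: a node reduces a window of raw readings to a minimum, mean, maximum, and sample count, then packs them into a few bytes. The payload layout (`PAYLOAD_FORMAT`) and the function names are hypothetical, chosen for this example rather than taken from any LoRa specification.

```python
import struct

# Hypothetical layout: min, mean, max as int16 in hundredths of a unit,
# followed by the sample count as uint8. Little-endian, 7 bytes total.
PAYLOAD_FORMAT = "<hhhB"


def summarize(readings):
    """Reduce a window of raw readings to a compact binary summary."""
    if not readings or len(readings) > 255:
        raise ValueError("window must contain 1 to 255 readings")
    scaled = [round(r * 100) for r in readings]
    average = round(sum(scaled) / len(scaled))
    # struct.pack raises struct.error if a value does not fit in int16,
    # i.e. readings outside roughly -327.68 to 327.67 units.
    return struct.pack(PAYLOAD_FORMAT, min(scaled), average, max(scaled), len(scaled))


def decode(payload):
    """Gateway or cloud side: recover the summary from the payload."""
    low, average, high, count = struct.unpack(PAYLOAD_FORMAT, payload)
    return {"min": low / 100, "mean": average / 100, "max": high / 100, "count": count}
```

With this layout, a window of any size up to 255 readings always produces the same 7-byte message, so airtime does not grow with the sampling rate.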

Edge computing at the node also allows control logic to run locally, reducing latency and maintaining control over connected devices even when connectivity to the gateway or cloud is interrupted. However, it isn’t without trade-offs: it may increase costs and affect battery life. To further optimize efficiency, gateway devices can also perform real-time data processing, lowering latency and cloud communication costs.
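One simple form of node-level control logic is a hysteresis controller, sketched below. It decides locally whether an actuator (for example, a fan) should be on, so it keeps working when the link to the gateway or cloud is down. The class name and the temperature thresholds are assumptions for illustration.

```python
class HysteresisController:
    """Switches an actuator on above one threshold and off below another."""

    def __init__(self, on_above, off_below):
        if off_below >= on_above:
            raise ValueError("off_below must be lower than on_above")
        self.on_above = on_above
        self.off_below = off_below
        self.active = False

    def update(self, reading):
        """Return the new actuator state for the latest sensor reading."""
        if reading >= self.on_above:
            self.active = True
        elif reading <= self.off_below:
            self.active = False
        # Between the two thresholds the previous state is kept,
        # which prevents rapid on/off cycling around a single set point.
        return self.active
```

For a controller created with `HysteresisController(on_above=30.0, off_below=27.0)`, the readings 25, 31, 29, 28, 26.5, 28 produce the states off, on, on, on, off, off. No network round trip is involved in any of these decisions.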

Two primary cloud platforms support low-power telemetry applications:

- System Management Platform: The user interface through which internal and external teams manage device solutions and their deployment.

- Device Management Platform: Although often overlooked, this platform is crucial for ensuring device health and security, enabling continuous firmware updates as well as device observability and monitoring.

Heavy AI Workloads: Optimized Infrastructure for Real-Time Processing

Some IoT applications demand heavy AI workloads with low latency and intensive processing requirements. Common examples include computer vision and automated speech recognition (ASR), where fast, accurate processing is paramount. This pattern is particularly well suited to applications where data security and privacy are concerns, because live data often remains within local systems.

Consider an ASR use case where nodes analyze audio data to detect wake words and identify commands. The nodes use digital signal processors to convert raw audio signals into features, which are then processed by neural networks. For instance, a node might detect the wake word “Hello Very” and activate further processing. Although nodes in this scenario are capable, their compute resources are still relatively limited, so they rely on a central processor for intensive model operations.
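To give a feel for the "raw audio to features" step, the sketch below splits a signal into overlapping frames and computes one log-energy value per frame. This is a deliberately simplified stand-in: production ASR front ends typically use richer spectral features (such as mel-frequency representations), and the frame size, hop, and function name here are assumptions for illustration.

```python
import math


def frame_log_energy(samples, frame_size=4, hop=2):
    """Return one log-energy feature per overlapping frame of the signal."""
    features = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = samples[start:start + frame_size]
        energy = sum(s * s for s in frame) / frame_size
        # The small constant avoids log(0) on silent frames.
        features.append(math.log(energy + 1e-10))
    return features
```

A sequence of such per-frame features, rather than the raw waveform, is what a small wake-word network on the node would consume in this simplified picture.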

The central processor, typically an embedded Linux system with GPU-like hardware, executes deep learning models to interpret user commands with sub-second latency. The processor then converts the commands into machine actions to be sent to the end devices (e.g., building management systems). In this setup, faster connectivity such as Ethernet or Wi-Fi replaces LoRa, as low latency and high bandwidth are essential.

While this system can function without cloud connectivity, cloud access remains valuable for model evolution. For example, when a model produces low-confidence predictions, it can send the corresponding anonymized data back to the AI platform for training. This iterative data flow enhances model accuracy over time, establishing a feedback loop for continuous model improvement.
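The feedback loop can be sketched as a confidence gate on the edge device: confident predictions are acted on, while uncertain ones are queued for later upload to the AI platform. The names `Prediction` and `FeedbackQueue` and the 0.6 threshold are hypothetical, and the sketch assumes anonymization happens before a sample is queued.

```python
from dataclasses import dataclass, field


@dataclass
class Prediction:
    label: str
    confidence: float  # model score between 0 and 1


@dataclass
class FeedbackQueue:
    """Collects low-confidence samples for later retraining."""
    threshold: float = 0.6
    pending: list = field(default_factory=list)

    def handle(self, sample_id, prediction):
        """Return the label to act on, or None if the model was unsure."""
        if prediction.confidence < self.threshold:
            # Assumption: the sample behind sample_id is already anonymized.
            self.pending.append({
                "sample_id": sample_id,
                "label": prediction.label,
                "confidence": prediction.confidence,
            })
            return None
        return prediction.label
```

Because `pending` only grows when the model is unsure, the device can upload it opportunistically whenever cloud connectivity is available, without affecting local inference.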

The AI platform processes incoming training data, refines algorithms, and manages model deployment. Although a simpler approach would be to handle all inference tasks in the cloud, moving critical deep learning operations to the edge reduces costs, shortens response times, and enhances data security.

A Framework for Strategic IoT Infrastructure Development

As businesses advance in their IoT journey, understanding and adapting to these evolving infrastructure requirements is essential. A well-defined analytics platform provides the baseline for data-driven decisions, while the transition to an AI platform enables the deployment of complex machine learning models that generate valuable insights.

From low-power telemetry in industrial settings to high-performance AI workloads, each IoT application pattern requires tailored infrastructure to maximize efficiency and intelligence. Very’s expertise in building scalable, adaptable IoT systems helps companies navigate this evolving landscape, empowering them to achieve strategic insights, efficiency gains, and long-term innovation.

By embracing these frameworks and infrastructure patterns, organizations can better leverage IoT’s transformative potential, making data a core component of their operational and strategic decision-making.

Author: Daniel Fudge

Senior Director of Software Engineering & Data Solutions

Daniel leads the data science and AI discipline at Very. Driven by ownership, clarity and purpose, Daniel works to nurture an environment of excellence among Very’s machine learning and data engineering experts — empowering his team to deliver powerful business value to clients.